The End of Hand-Crafted Systems

My research pursues a fundamental shift: self-designing data and AI systems. By discovering the alphabets and grammars that govern system architectures, we enable machines—not humans—to invent entirely new systems.

The north star: helping bring the full power of data and AI to every scientist, engineer, and decision-maker.

A Design Space Beyond Human Reach

The AI revolution is transforming every field and industry, driving unprecedented demand for data-centric computation. As new AI and data applications, data types, hardware platforms, and workloads appear faster than ever, the backbone systems that power this revolution have become the bottleneck.

Whether the goal is training a new model, solving an end-to-end enterprise use case, or answering a scientific question, progress depends on how fast we can build tailored systems and execute AI experiments and workflows.

Yet a single system architecture faces a design space of more than 10^100 alternatives. Today, we still cling to a handful of "good" templates, each requiring years of manual design and tuning, and each suited to only a narrow set of scenarios. It is time to abandon this artisanal practice.

Alphabets, Grammars, Calculators

The insight: model the design space of systems as an alphabet of low-level design primitives, and whole architectures as sentences in a grammar over that alphabet. Systems calculators can then synthesize, on demand, blueprints that have never existed before.

The Alphabet

Decompose systems into their fundamental design atoms—the smallest decisions that shape how data is laid out, accessed, and processed.

The Grammar

Define rules for how primitives combine into coherent architectures, from model training pipelines to full data and inference engines.

The Calculator

Build math- and ML-driven algorithms that navigate this design space, finding optimal designs for specific workloads, hardware, and constraints.
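To make the three ideas concrete, here is a deliberately tiny sketch. Everything in it is an illustrative assumption rather than a description of any DASlab system: the three primitives in `ALPHABET`, the single rule in `is_valid`, and the unit costs in `cost` are all made up. The alphabet is a set of design decisions, the grammar filters out combinations that do not form a coherent design, and the calculator searches the remaining designs with a cost model.

```python
from itertools import product

# Hypothetical alphabet: each primitive is one design decision with a small domain.
ALPHABET = {
    "layout": ["sorted", "hashed", "log"],
    "fanout": [4, 64, 1024],
    "write_buffer": [False, True],
}


def is_valid(design):
    """Toy grammar rule (an assumption): fanout only matters for sorted,
    tree-like layouts, so other layouts are admitted with the smallest fanout only."""
    if design["layout"] != "sorted" and design["fanout"] != 4:
        return False
    return True


def enumerate_designs():
    """Yield every 'sentence' over the alphabet that the grammar accepts."""
    names = list(ALPHABET)
    for values in product(*(ALPHABET[name] for name in names)):
        design = dict(zip(names, values))
        if is_valid(design):
            yield design


def cost(design, workload):
    """Toy cost model with made-up unit costs per operation type."""
    layout = design["layout"]
    read_cost = {"sorted": 1.0 + 8.0 / design["fanout"], "hashed": 1.0, "log": 20.0}[layout]
    write_cost = {"sorted": 4.0, "hashed": 2.0, "log": 1.0}[layout]
    scan_cost = {"sorted": 1.0, "hashed": 50.0, "log": 50.0}[layout]
    if design["write_buffer"]:
        write_cost *= 0.5   # buffered writes are cheaper...
        read_cost += 0.5    # ...but reads must also check the buffer
    return (workload["reads"] * read_cost
            + workload["writes"] * write_cost
            + workload["range_scans"] * scan_cost)


def calculate(workload):
    """The 'calculator': pick the cheapest valid design for a workload."""
    return min(enumerate_designs(), key=lambda d: cost(d, workload))


if __name__ == "__main__":
    write_heavy = {"reads": 0, "writes": 100, "range_scans": 0}
    print(calculate(write_heavy))
```

Exhaustive enumeration only works because this toy space is tiny. With a space on the order of 10^100 designs, the search itself has to be guided by models, which is the role the text assigns to math- and ML-driven calculators.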

From Theory to Systems

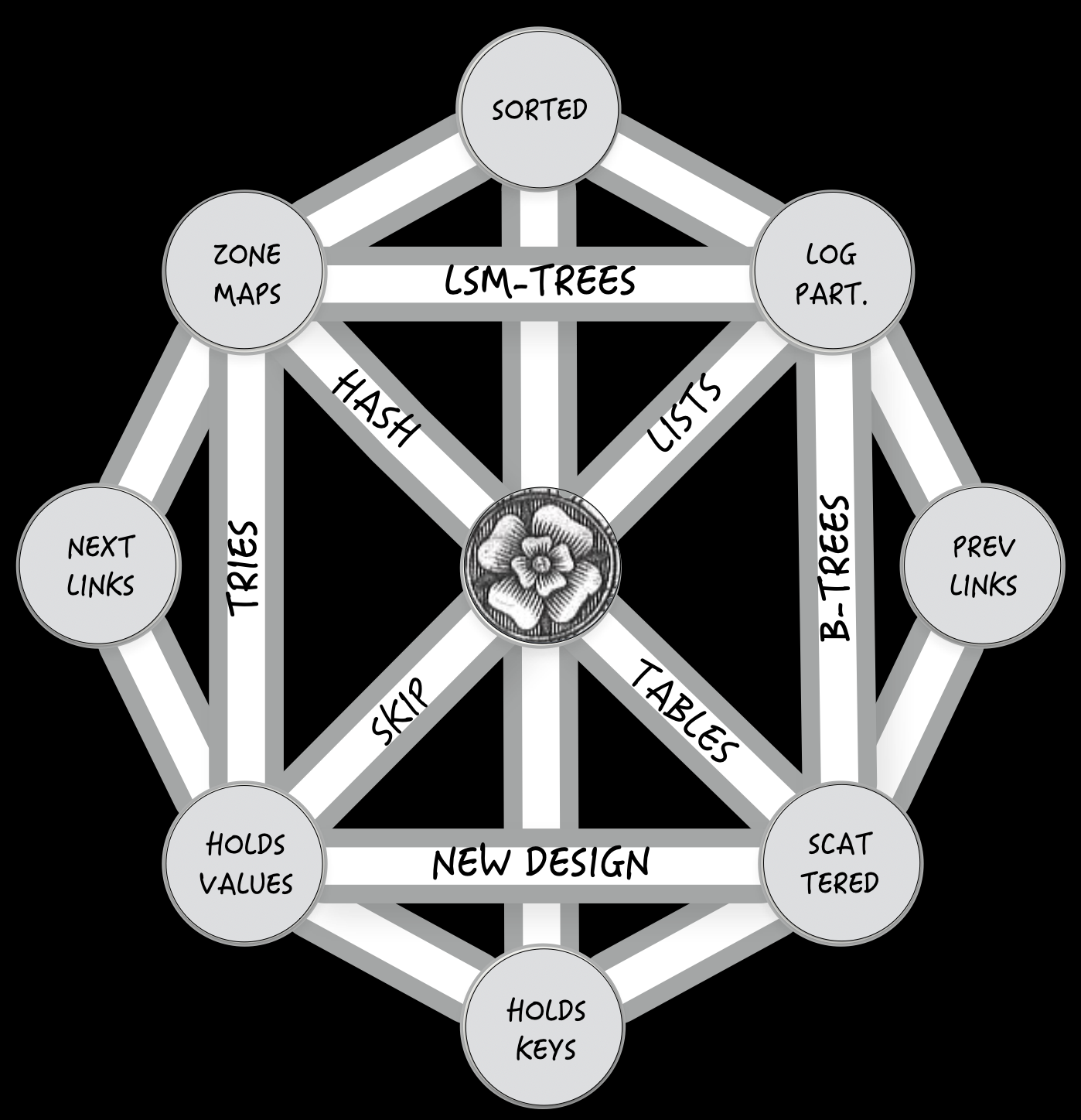

Data structures are among the most fundamental elements of computer science. Roughly 5,000 data structures have been invented and published since the dawn of the field. By capturing the first principles of data layout (how nodes organize data and relate to each other), the Data Calculator invents, codes, and explores trillions of previously unknown data structure variants. The periodic table of data structures gives a high-level summary of this work.

NoSQL systems power AI, blockchain, analytics, and much more. Cosine and Limousine automatically generate novel NoSQL stores that run up to three orders of magnitude faster than today's best deployments. Designs span from LSM-trees to B-trees to hash tables, plus trillions of hybrids that exist nowhere in literature or industry.

Today, image AI is primarily driven by JPEG storage, but JPEG was created decades ago for human eyes. Images today are seen by algorithms... The Image Calculator synthesizes a massive design space for image storage and invents new designs tailored for neural networks. By optimizing storage and neural network design together, we can achieve order-of-magnitude speedups in end-to-end vision pipelines.

Training very large models is slow, expensive, and error-prone, and it is governed by extremely complex and ever-evolving algorithms. Given a neural network, a performance goal, and hardware constraints, LegoAI creates a massive design space and can instantly invent and implement entirely new training algorithms. These novel distributed-training algorithms extract every flop and byte from modern accelerators, automatically adapting to hardware topology and model architecture. LegoAI has been productized into TorchTitan and ships with PyTorch.

Inspiration

Why Calculators and the Dream of Leibniz

The dream of automated reasoning is as old as science itself. In 1666, Gottfried Wilhelm Leibniz completed his doctoral dissertation with a radical vision: a calculus ratiocinator, a universal machine that could derive all truths from a small alphabet of primitive concepts and their combinations. He imagined that disputes could be settled not by argument but by calculation.

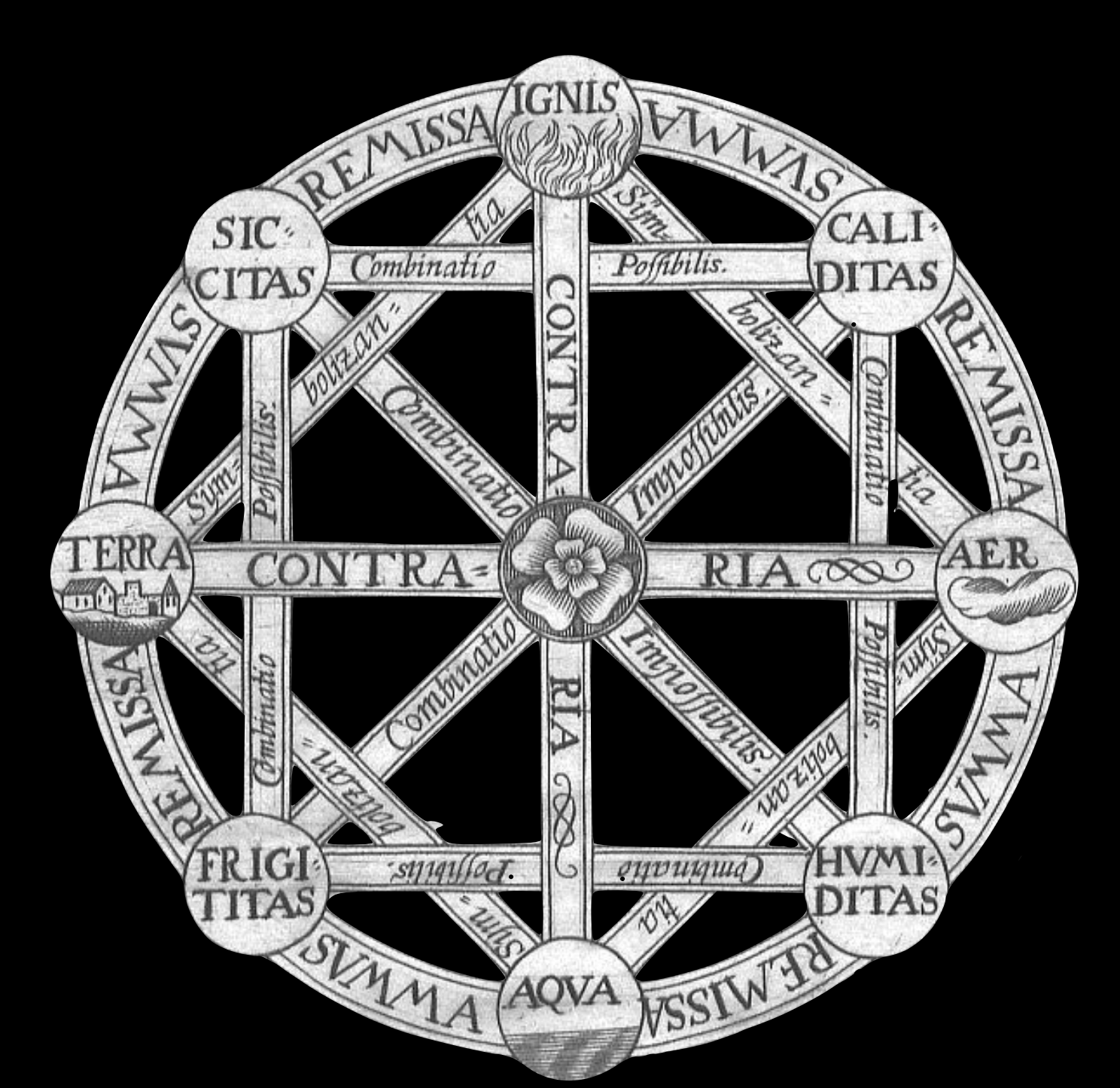

Leibniz's De Arte Combinatoria

The original diagram from Leibniz's dissertation depicting a combinatorial wheel of primitive concepts that generate knowledge through systematic combination.

The Data Calculator

Our realization of Leibniz's vision for data systems: design primitives that combine to synthesize optimal data structures for any workload. We now apply this across the full AI stack.

"Let us calculate."— Gottfried Wilhelm Leibniz

Influences

My work has been inspired by numerous computer science pioneers. Beyond my mentors Martin Kersten and Anastassia Ailamaki, the self-designing systems vision draws from foundational ideas across decades of research: S. Bing Yao's early work on system modeling and storage advisors, Joe Hellerstein's extensible indexing, Don Batory's principles of modular system synthesis, and Stefan Manegold's model synthesis for hardware-conscious algorithms. Mike Franklin's work on discovering new algorithms through taxonomies of design principles follows the same philosophy we now apply to entire system architectures.

Machines Write the Sentences.

Humans Ask Deeper Questions.

These results signal a future in which systems research increasingly focuses on crafting richer alphabets and grammars while machines write the sentences—freeing designers and researchers to pursue more profound questions.

Practitioners will dial in cost, latency, and accuracy with surgical precision.

Expanding the Grammar of Intelligence

Building on the foundations of self-designing systems, we are now extending these principles to the full stack of modern AI infrastructure.

RAG Agents

Applying self-designing principles to retrieval-augmented generation, enabling systems that automatically synthesize optimal retrieval strategies, index structures, and agent orchestration patterns.

Managing Context

Developing grammars for context management that allow systems to self-design how they store, compress, retrieve, and reason over long-range dependencies so we can apply AI to ever more complex problems.

Large Model Compilers

Creating compilers that transform model specifications into optimized execution plans, automatically navigating the vast design space of hardware mappings, parallelization strategies, and memory hierarchies.

Model Fine-Tuning

Extending the calculator paradigm to model adaptation, synthesizing optimal fine-tuning recipes by reasoning over the design space of data selection, parameter-efficient methods, and training dynamics.

Origins: Database Cracking

Systems That Learn from Their Workload

The ideas behind self-designing systems trace back to my PhD work on Database Cracking with my amazing advisors Martin Kersten and Stefan Manegold—a paradigm where data systems continuously adapt their physical storage layout in response to the queries they receive.

Rather than requiring administrators to manually create indexes upfront, cracking systems treat each query as an opportunity to incrementally reorganize data. Over time, the storage layout converges to one that is perfectly tailored to the actual workload—adapting to data properties, query patterns, and hardware characteristics.
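The incremental reorganization described above can be sketched in a few lines. This is a simplified, single-column illustration and not the original implementation: the class name `CrackedColumn` and its methods are hypothetical, and it partitions each piece by building new lists for readability, where a real system would swap values in place. Each range query cracks the column at its two bounds, so later queries touch ever smaller pieces.

```python
import bisect


class CrackedColumn:
    """A column that reorganizes itself a little with every range query."""

    def __init__(self, values):
        self.data = list(values)  # private copy of the column, reordered over time
        self.pivots = []          # sorted pivot values seen so far
        self.positions = []       # positions[i]: first index holding a value >= pivots[i]

    def _crack(self, pivot):
        """Ensure values < pivot precede values >= pivot; return the split index."""
        i = bisect.bisect_left(self.pivots, pivot)
        if i < len(self.pivots) and self.pivots[i] == pivot:
            return self.positions[i]  # already cracked here, nothing to do
        # Only the piece between the neighboring cracks can contain the pivot.
        lo = self.positions[i - 1] if i > 0 else 0
        hi = self.positions[i] if i < len(self.positions) else len(self.data)
        piece = self.data[lo:hi]
        smaller = [v for v in piece if v < pivot]
        larger = [v for v in piece if v >= pivot]
        self.data[lo:hi] = smaller + larger
        split = lo + len(smaller)
        self.pivots.insert(i, pivot)
        self.positions.insert(i, split)
        return split

    def range_query(self, low, high):
        """Return all values v with low <= v < high (assumes low <= high)."""
        start = self._crack(low)
        end = self._crack(high)
        return self.data[start:end]


if __name__ == "__main__":
    column = CrackedColumn([13, 16, 4, 9, 2, 12, 7, 1, 19, 3, 14, 11, 8, 6])
    print(sorted(column.range_query(5, 10)))
    print(column.pivots, column.positions)
```

No index is built upfront. The first query pays for partitioning the whole column, and each later query only partitions the one or two pieces its bounds fall into, which is how the layout gradually converges toward the workload.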

Self-designing systems take this philosophy to its logical extreme: if a system can learn to optimize its storage layout, why not learn to optimize its entire architecture?

Students

Research Group

Alumni & Placement

PhD

- Brian Hentschel → Google Research

- Sanket Purandare → Meta, PyTorch

- Lukas Maas → Microsoft Research

- Abdul Wasay → Intel Research

- Michael Kester → Vertica

- Wilson Qin → 2040 Labs

Postdoctoral Researchers

- Manos Athanassoulis → Professor, Boston University

- Niv Dayan → Professor, University of Toronto

- Siqiang Luo → Professor, NTU Singapore

- Konstantinos Zoumpatianos → Snowflake

- Hao Jiang → Databricks

- Subarna Chatterjee → DataStax

Current Researchers

PhD

- Milad Rezaei Hajidehi

- Konstantinos Kopsinis

Postdoctoral Researchers

- Qitong Wang

- Utku Sirin

Stratos Idreos

I am a Professor at Harvard's John A. Paulson School of Engineering and Applied Sciences and Faculty Co-Director of the Harvard Data Science Initiative. I lead DASlab, the Harvard Data and AI Systems Laboratory, where my research pursues a "grammar of systems"—enabling machines to design and tune systems architectures that are tailored to their context, faster, and more scalable.

Before Harvard, I was a researcher at CWI Amsterdam and earned my PhD from the University of Amsterdam. I co-chaired ACM SIGMOD 2021 and IEEE ICDE 2022, co-founded the ACM/IMS Journal of Data Science, and served as chair of the ACM SoCC Steering Committee.